Michael Malice

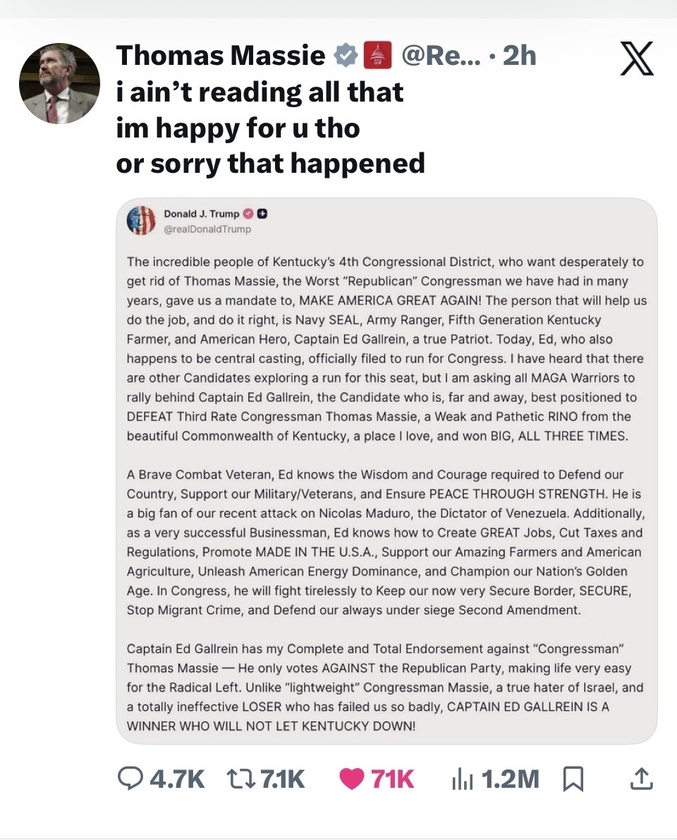

We are here for the sick burns and the LULZ.

I had a conversation with an LLM where I asked it a question about a controversial topic. When it gave a reply I thought was wrong, I implied as much in my next prompt, so its next reply better matched my beliefs! This proves that my opinion was correct the whole time and is not a reflection of what LLMs are actually designed to do.